What is it about?

INNAMP is an incremental neural network architecture for supervised learning. It learns with multi-layer perceptron networks connected in parallel. It also includes a monitor perceptron that tells data from a new (unseen) class apart from data of classes the network has already learnt. If a sample belongs to a new class, the network extends itself to learn that class.
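The following Python sketch shows the grow-on-novelty pattern described above. It rests on loudly stated assumptions and is not the authors' implementation. The class names `ClassModule`, `MonitorPerceptron` and `IncrementalNetwork` are hypothetical. Each per-class module is a prototype (running-mean) scorer that stands in for the multi-layer perceptrons INNAMP actually uses. The monitor is a threshold unit with hand-set weight `w=1.0` and bias `b=-0.5`, not a trained perceptron. The sketch does illustrate the overall flow. Modules score an input side by side. The monitor decides whether the best score looks like a seen class. A labelled sample from a new class adds a new module and leaves the existing ones alone.

```python
import math


class ClassModule:
    """Hypothetical stand-in for one per-class sub-network.

    INNAMP uses multi-layer perceptrons here; to keep this sketch short and
    deterministic, the module only tracks a running mean (prototype) of its
    class and scores inputs with a Gaussian similarity in (0, 1].
    """

    def __init__(self, dim, width=1.0):
        self.mean = [0.0] * dim
        self.count = 0
        self.width = width  # assumed fixed kernel width

    def update(self, x):
        # Incremental mean update: only this module's parameters change.
        self.count += 1
        self.mean = [m + (xi - m) / self.count for m, xi in zip(self.mean, x)]

    def score(self, x):
        sq = sum((xi - m) ** 2 for xi, m in zip(x, self.mean))
        return math.exp(-sq / (2 * self.width ** 2))


class MonitorPerceptron:
    """Hypothetical monitor: a threshold unit over the best module score.

    Outputs 1 ("seen class") when w * best_score + b >= 0, else 0 ("unseen").
    The weight and bias are hand-set assumptions, not learned values.
    """

    def __init__(self, w=1.0, b=-0.5):
        self.w, self.b = w, b

    def fires(self, best_score):
        return 1 if self.w * best_score + self.b >= 0 else 0


class IncrementalNetwork:
    def __init__(self, dim):
        self.dim = dim
        self.modules = {}  # class label -> ClassModule, all scored in parallel
        self.monitor = MonitorPerceptron()

    def predict(self, x):
        """Return the best-matching known label, or None if x looks unseen."""
        if not self.modules:
            return None
        scores = {label: m.score(x) for label, m in self.modules.items()}
        best = max(scores, key=scores.get)
        return best if self.monitor.fires(scores[best]) else None

    def learn(self, x, label):
        """Supervised update; adds a new module when the label is new."""
        if label not in self.modules:
            self.modules[label] = ClassModule(self.dim)  # extend the network
        self.modules[label].update(x)


if __name__ == "__main__":
    net = IncrementalNetwork(dim=2)
    for x in [(0.0, 0.0), (0.2, -0.1), (-0.1, 0.1)]:
        net.learn(x, "A")
    print(net.predict((0.1, 0.0)))  # A
    print(net.predict((5.0, 5.0)))  # None: the monitor flags an unseen class
    for x in [(5.0, 5.0), (5.2, 4.9)]:
        net.learn(x, "B")  # the network grows a module for "B"
    print(net.predict((5.0, 5.0)))  # B
```

In this sketch, learning class "B" only creates and updates B's module, so the parameters already learnt for "A" stay exactly as they were. That is one simple way to favour stability over plasticity. The trade-off in the published architecture may be handled differently.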

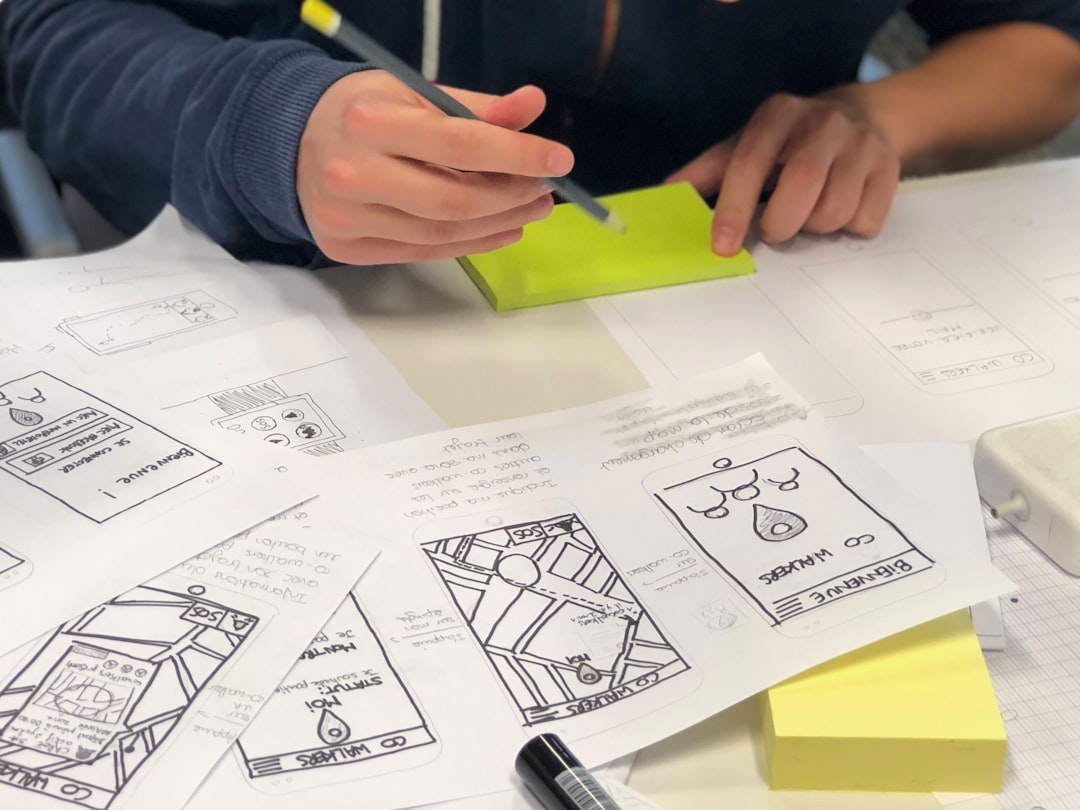

Why is it important?

Many incremental architectures already exist, but most of them operate in two separate modes: a training mode and a testing mode. In training mode they can incorporate new data. In testing mode, however, they assign every new sample to one of the existing classes, even when it comes from a class they have never seen. INNAMP does not have this limitation. It also produces tighter class boundaries than the other architectures.

Perspectives

The goal of the paper is not only to achieve good classification accuracy but also to address two issues that are central to incremental learning systems: (a) the ability of the system to detect that a newly arrived sample may belong to a class it has not learnt yet, and (b) the ability of the system to adapt itself to the new class without significantly changing the models of the classes it has already learnt (i.e. addressing the stability-plasticity dilemma).

Sharad Gupta

Indian Institute of Information Technology, Allahabad

Read the Original

This page is a summary of: INNAMP: An incremental neural network architecture with monitor perceptron, AI Communications, June 2018, IOS Press.

DOI: 10.3233/aic-180767.