What is it about?

The work presents area-efficient, fast implementations of adders, multipliers, and dot-product accumulators tailored to AMD Versal FPGAs. It introduces a generator that assembles arithmetic operators from compact building blocks: small, highly efficient arithmetic circuits tuned to the characteristics of the Versal fabric. The paper proposes new circuits of this kind, elaborates on construction heuristics, and details the resulting improvements through two case studies.
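For readers unfamiliar with the idea of assembling an operator from small building blocks, the following is a minimal sketch of a generic compressor tree. It uses only the textbook 3:2 counter (a full adder) and a simple greedy pass per stage. It is not the Versal-tuned circuits or the construction heuristics from the paper, and all function names are hypothetical.

```python
def to_columns(operands, width):
    """Arrange unsigned operands as bit columns; columns[i] holds bits of weight 2**i."""
    return [[(x >> i) & 1 for x in operands] for i in range(width)]


def full_adder(a, b, c):
    """3:2 counter: three bits of equal weight become a sum bit and a carry bit."""
    s = a ^ b ^ c
    carry = (a & b) | (a & c) | (b & c)
    return s, carry


def compress(columns):
    """Apply 3:2 counters stage by stage until every column holds at most two bits."""
    columns = [list(col) for col in columns]
    while any(len(col) > 2 for col in columns):
        nxt = [[] for _ in range(len(columns) + 1)]
        for i, col in enumerate(columns):
            while len(col) >= 3:
                s, carry = full_adder(col.pop(), col.pop(), col.pop())
                nxt[i].append(s)          # sum bit keeps its weight
                nxt[i + 1].append(carry)  # carry bit moves one column up
            nxt[i].extend(col)            # leftover bits pass through unchanged
        columns = nxt
    return columns


def value(columns):
    """Numeric value of a bit heap (what the final two-row adder would compute)."""
    return sum(bit << i for i, col in enumerate(columns) for bit in col)


operands = [13, 7, 9, 3, 15]
reduced = compress(to_columns(operands, width=4))
total = value(reduced)
```

Each counter preserves the weighted sum of the bits it consumes, so the reduced heap has the same value as the sum of the operands while needing only one conventional two-input adder at the end. A hardware generator would instead choose among several counter shapes to match the target fabric.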

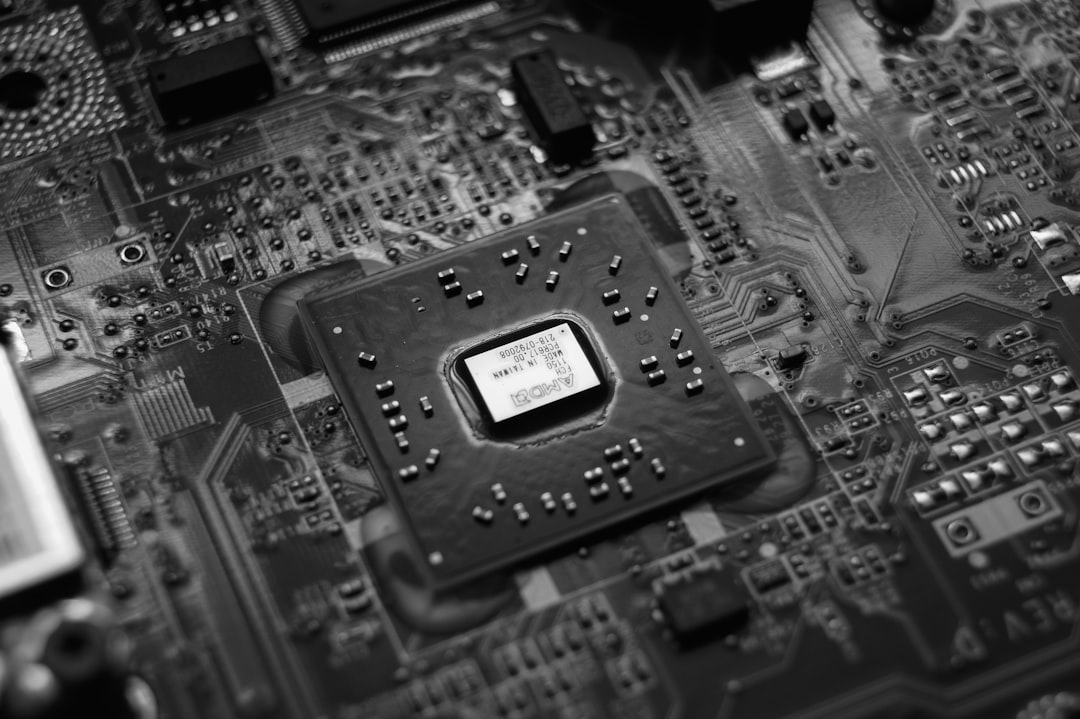

Featured Image

Photo by Ludde Lorentz on Unsplash

Why is it important?

Neural network inference has proven remarkably effective even under extensive parameter quantization. The arithmetic precision a quantized neural network needs can vary across layers, weights, and activations. FPGAs are well suited to custom-precision arithmetic because highly quantized operators yield substantial resource savings. More efficient implementations of these operators therefore make FPGAs even more attractive for inference solutions.

Perspectives

The proposed approach substantially reduces the footprint of custom-precision FPGA arithmetic. This gain in area efficiency translates into substantial performance improvements for compute-intensive tasks such as neural network inference and digital signal processing. We are currently integrating the approach into FINN, our fabric AI inference solution.

Konstantin Hoßfeld

Advanced Micro Devices Inc

Read the Original

This page is a summary of: High-Efficiency Compressor Trees for Latest AMD FPGAs, ACM Transactions on Reconfigurable Technology and Systems, February 2024, ACM (Association for Computing Machinery), DOI: 10.1145/3645097.
