What is it about?

This page introduces "PArtNNer: Platform-Agnostic Adaptive Edge-Cloud DNN Partitioning for Minimizing End-to-End Latency", an approach aimed at improving the efficiency of artificial intelligence (AI) applications, particularly where real-time responsiveness is crucial. In simple terms, PArtNNer reduces delay, or "latency", in AI tasks by dividing the workload between small devices such as smartphones, smartwatches, and drones and powerful computers in the cloud. Imagine your smart speaker responding to your voice commands faster, or a self-driving car making split-second decisions with minimal delay - that's the kind of improvement PArtNNer aims to bring to the AI-driven technologies we use every day.

PArtNNer is platform-agnostic, meaning it is designed to work across different types of devices and computing environments. Whether you're using a smartphone, VR glasses, or a desktop computer, PArtNNer can adapt and optimize the AI task accordingly.

PArtNNer also employs adaptive partitioning, a dynamic approach that continuously evaluates factors such as task complexity and available resources to determine the most efficient distribution of workload between the device and cloud servers. As conditions change, PArtNNer adjusts the split in real time to keep latency low, regardless of the computing resources available.
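To make the idea of partitioning concrete, here is a minimal Python sketch of choosing a split point for a network under a simple latency model. It is an illustration only, not the algorithm from the paper: the `LayerProfile` type, the `best_split` function, the exhaustive search, and all numbers in `EXAMPLE_LAYERS` are hypothetical. It assumes that end-to-end latency is the time to run the first layers on the device, plus the time to send the intermediate data over the network, plus the time to run the remaining layers in the cloud.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LayerProfile:
    """Hypothetical per-layer measurements (illustrative values only)."""
    edge_ms: float    # time to run this layer on the edge device
    cloud_ms: float   # time to run this layer on the cloud server
    output_kb: float  # size of the data this layer produces


def end_to_end_latency_ms(layers: Sequence[LayerProfile], split: int,
                          input_kb: float, bandwidth_kb_per_ms: float) -> float:
    """Latency when layers [0, split) run on the edge and the rest in the cloud.

    Simplifying assumption: returning the final result to the device is free.
    """
    edge = sum(layer.edge_ms for layer in layers[:split])
    cloud = sum(layer.cloud_ms for layer in layers[split:])
    if split == len(layers):
        transfer = 0.0  # everything runs on the device, nothing is sent
    else:
        # Send the raw input if nothing ran locally, else the last local output.
        sent_kb = input_kb if split == 0 else layers[split - 1].output_kb
        transfer = sent_kb / bandwidth_kb_per_ms
    return edge + transfer + cloud


def best_split(layers: Sequence[LayerProfile], input_kb: float,
               bandwidth_kb_per_ms: float) -> int:
    """Try every split point and return the one with the lowest latency."""
    return min(
        range(len(layers) + 1),
        key=lambda s: end_to_end_latency_ms(layers, s, input_kb,
                                            bandwidth_kb_per_ms),
    )


# Made-up three-layer network: later layers are slower but produce less data.
EXAMPLE_LAYERS = [
    LayerProfile(edge_ms=4, cloud_ms=1, output_kb=200),
    LayerProfile(edge_ms=6, cloud_ms=1, output_kb=50),
    LayerProfile(edge_ms=10, cloud_ms=2, output_kb=5),
]

if __name__ == "__main__":
    for bandwidth in (100, 10, 1):  # KB per millisecond
        split = best_split(EXAMPLE_LAYERS, input_kb=150,
                           bandwidth_kb_per_ms=bandwidth)
        print(f"bandwidth={bandwidth} KB/ms -> run {split} layer(s) on the edge")
```

With these made-up numbers, a fast network favors sending everything to the cloud (split 0), a moderate one favors running two layers locally (split 2), and a slow one favors keeping the whole network on the device (split 3). Re-running the search whenever the measured bandwidth changes is one simple way to picture what "adaptive" means here.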

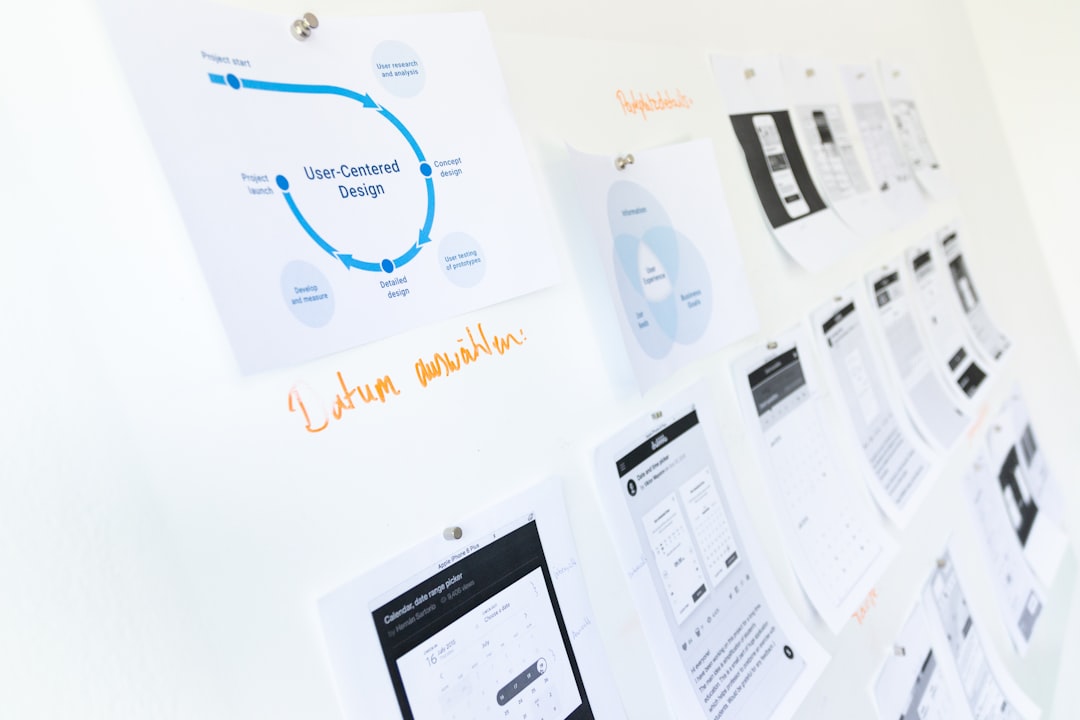

Featured image: photo by Igor Omilaev on Unsplash

Why is it important?

PArtNNer's approach to reducing latency for AI tasks is significant in today's AI-driven landscape. By dynamically partitioning complex AI workloads between edge devices and cloud servers, PArtNNer minimizes end-to-end latency and, with it, improves the performance and reliability that real-time applications such as healthcare diagnostics, industrial automation, and autonomous vehicles depend on. Because it is platform-agnostic, it can be integrated across diverse environments, offering efficiency gains and cost savings in AI deployment. For large language models (LLMs) such as GPT-3 and LLaMA, which demand substantial computational resources, adaptive edge-cloud partitioning could broaden access and improve responsiveness for users worldwide. In short, PArtNNer points the way toward more responsive, accessible, and cost-effective AI solutions.

Perspectives

Low latency and energy-efficient execution of DNN models across the edge and cloud are some of the most important challenges today. This paper provides a holistic end-to-end solution that is applicable across different types of edge devices and server nodes.

Arnab Raha

Intel Corp

Read the Original

This page is a summary of: PArtNNer: Platform-agnostic Adaptive Edge-Cloud DNN Partitioning for minimizing End-to-End Latency, ACM Transactions on Embedded Computing Systems, October 2023, ACM (Association for Computing Machinery), DOI: 10.1145/3630266.
