What is it about?

The spread of misinformation is not a new phenomenon, but online platforms have made it more pervasive than ever before. Machine learning algorithms that automatically detect misinformation can help cope with the sheer volume of false information, but how do we know when we can trust their assessments? We have introduced E-BART, an automated fact-checking algorithm that achieves competitive accuracy and provides a human-readable justification for its decision.

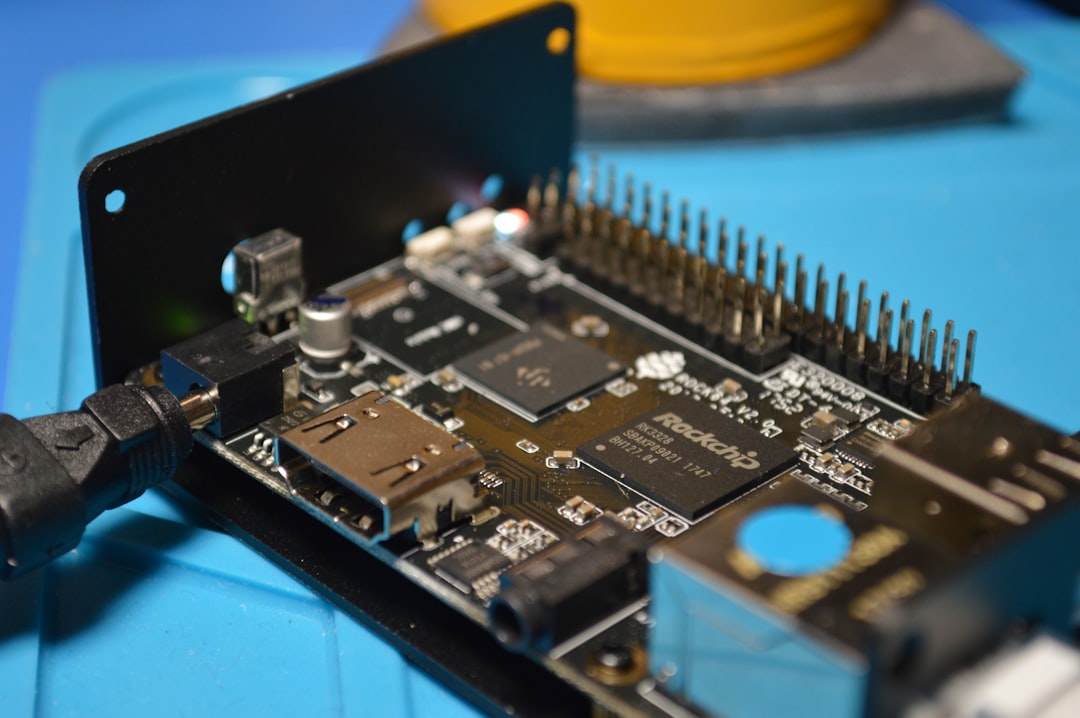

Photo by Agence Olloweb on Unsplash

Why is it important?

Most explainable automated fact-checking algorithms use two separate components: one that computes the fact-check decision and one that generates the explanation. Our solution uses a single neural network model that performs both tasks simultaneously. We also evaluated the model's output with human participants. The main findings were: a) the model's accuracy was competitive with the state of the art, b) the fact-check decision and explanation were more consistent in our model than in solutions that compute them separately, and c) the model's output made people more skeptical about what they read online.

Perspectives

Working on this article was extremely rewarding. Automated fact-checking is still a young field, and there is much left to discover. I hope this paper paves the way for more accurate, explainable fact-checking algorithms, and that such algorithms will eventually help reduce the spread of misinformation online.

Erik Brand

University of Queensland

Read the Original

This page is a summary of: A Neural Model to Jointly Predict and Explain Truthfulness of Statements, Journal of Data and Information Quality, July 2022, ACM (Association for Computing Machinery), DOI: 10.1145/3546917.
