What is it about?

This paper presents NIRVANA, a new method for validating the predictions of deep learning (DL) models based on uncertainty metrics. It aims to assess the reliability of a DL model's predictions with a second model, and to improve those predictions using the resulting uncertainty quantification. The method is designed to address the uncertainty inherent in both cyber-physical systems (CPSs) and deep learning. First, the researchers used prediction-time dropout-based neural networks (dropout kept active while making predictions) to quantify the uncertainty of DL models applied to CPS data. Second, they fed the quantified uncertainty into a support vector machine (SVM) that predicts wrong labels, with the aim of building a highly discriminating prediction validator driven by uncertainty values.
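The two-step pipeline described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' NIRVANA implementation: the one-hidden-layer network with caller-supplied weights, the hypothetical helper names `mc_dropout_predict`, `uncertainty_features`, and `train_validator`, the three uncertainty metrics (variation ratio, predictive entropy, mutual information, which are common choices for dropout-based uncertainty; the paper's exact set may differ), and the RBF-kernel `SVC` from scikit-learn are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.svm import SVC

# Illustrative sketch only: helper names, network shape, and the chosen
# uncertainty metrics are assumptions, not the NIRVANA authors' code.


def mc_dropout_predict(x, weights, n_passes=50, p_drop=0.5, rng=None):
    """Run `n_passes` stochastic forward passes of a one-hidden-layer MLP with
    dropout kept ON at prediction time (p_drop must be in [0, 1)).
    Returns class probabilities of shape (n_passes, n_samples, n_classes)."""
    rng = np.random.default_rng() if rng is None else rng
    w1, b1, w2, b2 = weights
    passes = []
    for _ in range(n_passes):
        hidden = np.maximum(0.0, x @ w1 + b1)                  # ReLU hidden layer
        keep = rng.random(hidden.shape) >= p_drop              # drop each unit with prob p_drop
        hidden = hidden * keep / (1.0 - p_drop)                # inverted-dropout rescaling
        logits = hidden @ w2 + b2
        logits = logits - logits.max(axis=1, keepdims=True)    # numerically stable softmax
        exp = np.exp(logits)
        passes.append(exp / exp.sum(axis=1, keepdims=True))
    return np.stack(passes)


def uncertainty_features(probs, eps=1e-12):
    """Summarise T stochastic passes (shape (T, N, C)) into per-sample features:
    variation ratio, predictive entropy, and mutual information."""
    n_passes, _, n_classes = probs.shape
    mean_probs = probs.mean(axis=0)                                   # (N, C)
    votes = probs.argmax(axis=2)                                      # (T, N) predicted class per pass
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)], axis=1)
    variation_ratio = 1.0 - counts.max(axis=1) / n_passes             # share of non-modal votes
    entropy = -(mean_probs * np.log(mean_probs + eps)).sum(axis=1)    # entropy of the mean prediction
    expected_entropy = -(probs * np.log(probs + eps)).sum(axis=2).mean(axis=0)
    mutual_info = entropy - expected_entropy                          # disagreement across passes
    return np.column_stack([variation_ratio, entropy, mutual_info])


def train_validator(features, was_wrong):
    """Fit an SVM that flags predictions as likely wrong (1) or correct (0)
    from their uncertainty features."""
    validator = SVC(kernel="rbf", class_weight="balanced")
    validator.fit(features, was_wrong)
    return validator
```

In this sketch, the validator would be trained on a held-out set where true labels are known, so that `was_wrong` marks the samples where the DL model's mean prediction disagreed with the ground truth. Once trained, it scores new predictions from their uncertainty features alone, which is what lets it act as a prediction validator without access to labels.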

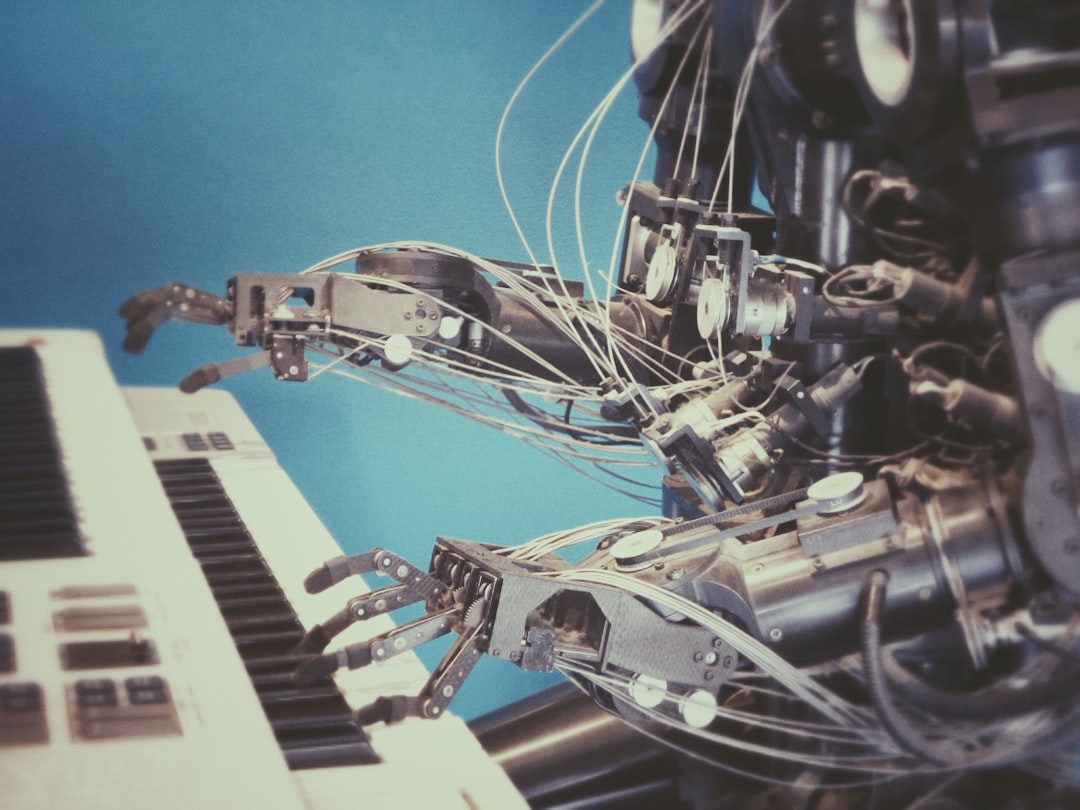

Photo by Possessed Photography on Unsplash

Why is it important?

Deep learning models are often used to model data from cyber-physical systems (CPSs). However, CPS data are often uncertain, and deep learning models introduce uncertainty of their own. If this uncertainty is not handled adequately, it can lead to unsafe CPS behavior.

Read the Original

This page is a summary of: Uncertainty-aware Prediction Validator in Deep Learning Models for Cyber-physical System Data, ACM Transactions on Software Engineering and Methodology, October 2022, ACM (Association for Computing Machinery).

DOI: 10.1145/3527451
