What is it about?

The paper describes how to apply mutation testing to Apache Spark big data programs. It defines mutation operators formally and reports on an experimental validation.

In TRANSMUT, faults are modelled by considering:

- transformation replacements
- modifications of input parameters
- inclusion and exclusion of transformations

TRANSMUT defines 17 mutation operators for Spark programs:

- modifications are made by changing the dataflow (DAG)
- the type consistency of transformations is ensured
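To make the idea concrete, here is a minimal sketch of dataflow mutation, written in plain Python rather than Spark so that it runs without a cluster. It is not TRANSMUT's implementation or API: the pipeline, the helper names (`run`, `killed_mutants`) and the three mutants are hypothetical, chosen only to illustrate a transformation replacement, a parameter modification and a transformation exclusion. Every step maps a list of integers to a list of integers, which stands in for the type consistency mentioned above.

```python
from typing import Callable, Dict, List

# A transformation maps a dataset (here a plain list) to a new dataset.
Transformation = Callable[[List[int]], List[int]]


def keep_even(xs: List[int]) -> List[int]:
    return [x for x in xs if x % 2 == 0]


def keep_odd(xs: List[int]) -> List[int]:
    # Same filter with a negated predicate (a modified input parameter).
    return [x for x in xs if x % 2 != 0]


def double(xs: List[int]) -> List[int]:
    return [2 * x for x in xs]


def increment(xs: List[int]) -> List[int]:
    return [x + 1 for x in xs]


def distinct(xs: List[int]) -> List[int]:
    # Order-preserving removal of duplicates.
    return list(dict.fromkeys(xs))


def run(pipeline: List[Transformation], data: List[int]) -> List[int]:
    # Apply each transformation in dataflow order.
    for step in pipeline:
        data = step(data)
    return data


# Hypothetical program under test: filter -> map -> distinct.
ORIGINAL: List[Transformation] = [keep_even, double, distinct]

# Hypothetical mutants, one per fault category (names are illustrative).
MUTANTS: Dict[str, List[Transformation]] = {
    "replacement": [keep_even, increment, distinct],  # map replaced by another map
    "parameter": [keep_odd, double, distinct],        # filter predicate modified
    "exclusion": [keep_even, double],                 # distinct removed
}


def killed_mutants(data: List[int]) -> List[str]:
    # A mutant is killed when its output differs from the original's output.
    expected = run(ORIGINAL, data)
    return sorted(name for name, p in MUTANTS.items() if run(p, data) != expected)


if __name__ == "__main__":
    for data in ([1, 2, 2, 3, 4], [2, 4]):
        killed = killed_mutants(data)
        print(data, "kills", killed, "score", len(killed) / len(MUTANTS))
```

In this sketch the input `[2, 4]` contains no duplicates, so the mutant that drops `distinct` survives, whereas `[1, 2, 2, 3, 4]` kills all three mutants. This is the kind of feedback mutation testing gives about the quality of test data.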

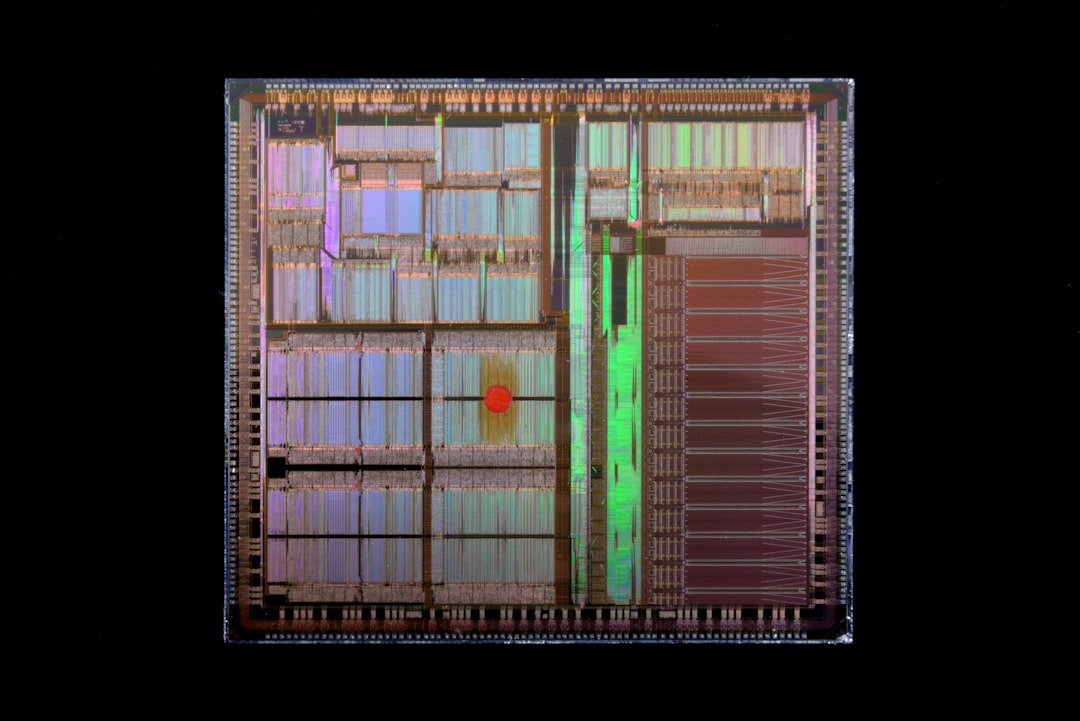

Featured Image

Photo by Luke Chesser on Unsplash

Why is it important?

Failures in big data processing programs can cause large losses for companies. Testing Spark programs before they go into production is one way of dealing with this problem. Testing of big data programs is still an open area of work, so TRANSMUT is an original tool addressing this open issue in academia and industry.

Perspectives

The aim is to provide a formal and working solution for testing both functional and non-functional faults in big data processing programs, and to ensure that these programs exploit the facilities of Spark on top of specific target architectures under the best conditions. Automatic testing of functional and non-functional faults will ease the tuning of big data processing solutions and reduce the associated time to market.

Genoveva VARGAS-SOLAR

CNRS

Read the Original

This page is a summary of: TRANSMUT‐Spark: Transformation mutation for Apache Spark, Software Testing Verification and Reliability, February 2022, Wiley, DOI: 10.1002/stvr.1809.
